Stories App

Stories App helps journalists streamline the creation of video-based social media content.

The app provides branded templates along with video editing capabilities, with zero buffering and offline support. All video projects are auto-saved, so they can be reopened for further editing later.

I was the engineering manager and lead on the project.

Technical Highlights

- iOS app built with Swift

- UI built with SwiftUI

- Video processing with AVFoundation and Core Animation

- Apollo Client for GraphQL integration

- Deployed with GitHub Actions and Fastlane

- Early prototype built with FFmpeg and Kotlin Multiplatform

Features

- Record or import videos

- Trim, split, stitch, mute, and re-order videos

- Library of editable, responsive and animated templates

- Templates are remotely configured

- Live preview during editing with zero buffering

- Offline support for editing and rendering

- Auto-saves projects locally so they can be accessed later

The Camera

The camera is a custom SwiftUI view built with UIViewRepresentable. It connects an AVCaptureSession to an AVCaptureVideoPreviewLayer, which presents the camera feed.

Most of the controller logic for constructing the AVCaptureSession is based on Apple's AVCam sample code.

The camera also supports several gestures, including tapping to adjust focus and two different ways of adjusting the zoom level that work simultaneously. These were implemented with custom view modifiers and a custom focus indicator.

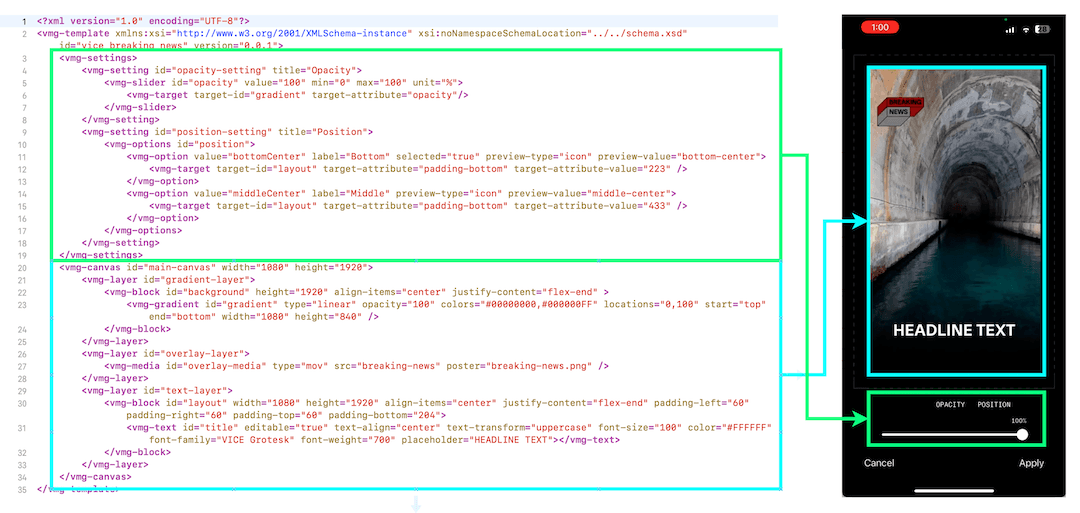
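The write-up doesn't say which two zoom methods the camera supports, so this sketch assumes a pinch and a vertical drag that may be active at the same time. The hypothetical `ZoomController` keeps the gesture math free of UIKit: each gesture contributes a multiplier relative to a shared base, and the product is clamped. In a real camera, `factor` would presumably be written to `AVCaptureDevice.videoZoomFactor` between `lockForConfiguration()` and `unlockForConfiguration()`, with bounds taken from the device (for example `minAvailableVideoZoomFactor` and `maxAvailableVideoZoomFactor`).

```swift
import Foundation

/// Hypothetical zoom model for two simultaneous gestures: a pinch and a vertical drag.
/// Each gesture reports values cumulative since it began (as UIPinchGestureRecognizer.scale
/// and UIPanGestureRecognizer.translation(in:) do), and the two multipliers are combined.
struct ZoomController {
    let minFactor: Double
    let maxFactor: Double
    /// Points of upward drag needed to double the zoom (an assumed tuning value).
    let pointsPerDoubling: Double
    private(set) var factor: Double

    private var base: Double
    private var pinchScale = 1.0
    private var dragMultiplier = 1.0
    private var pinchActive = false
    private var dragActive = false

    init(minFactor: Double = 1, maxFactor: Double = 8, pointsPerDoubling: Double = 200) {
        precondition(minFactor > 0 && minFactor <= maxFactor)
        self.minFactor = minFactor
        self.maxFactor = maxFactor
        self.pointsPerDoubling = pointsPerDoubling
        self.factor = minFactor
        self.base = minFactor
    }

    mutating func pinchChanged(scale: Double) {
        pinchActive = true
        pinchScale = scale
        recompute()
    }

    /// Folds the pinch into the base so a drag still in progress continues smoothly.
    mutating func pinchEnded() {
        pinchActive = false
        base *= pinchScale
        pinchScale = 1
        recompute()
    }

    /// Negative translationY means the finger moved up, which zooms in.
    mutating func dragChanged(translationY: Double) {
        dragActive = true
        dragMultiplier = pow(2, -translationY / pointsPerDoubling)
        recompute()
    }

    mutating func dragEnded() {
        dragActive = false
        base *= dragMultiplier
        dragMultiplier = 1
        recompute()
    }

    private mutating func recompute() {
        factor = min(max(base * pinchScale * dragMultiplier, minFactor), maxFactor)
        // With no gesture active, drop any overshoot so the next gesture starts from the visible zoom.
        if !pinchActive && !dragActive { base = factor }
    }
}

var zoom = ZoomController()
zoom.pinchChanged(scale: 2)          // pinch out: 2x
zoom.dragChanged(translationY: -200) // drag up at the same time: 4x
zoom.pinchEnded()
zoom.dragEnded()
print(zoom.factor) // 4.0
```

Folding each finished gesture into the base (rather than resetting everything) is what lets one gesture end while the other continues without the zoom jumping.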

Template Data Format

Templates aren't bundled with the app. Instead, we designed data structures that represent our templates and can be delivered over an API. The app uses this data to dynamically render each template, along with the UI needed to edit it.

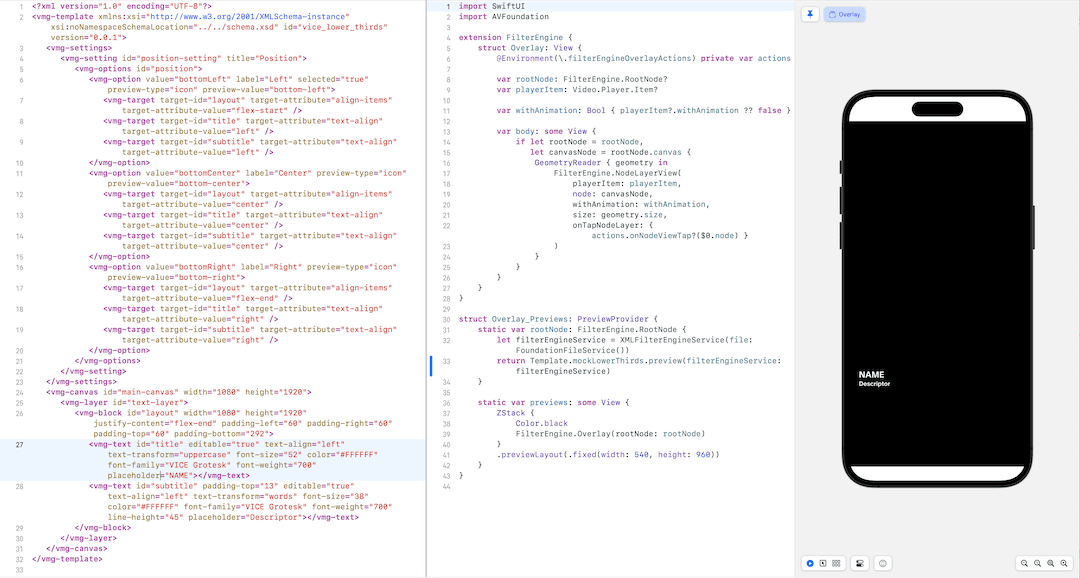

The data format is XML and is influenced by HTML.

During development, engineers commonly built these templates locally. SwiftUI previews made it possible to wire up the entire template parsing and rendering pipeline, so the team could verify that the code generated visuals identical to our designs.
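The actual template schema isn't shown here, so the tag and attribute names below (`template`, `stack`, `text`, `image`, `align`, `padding`, `editable`) are invented for illustration. This sketch shows one plausible way to turn an HTML-like XML template into an in-memory tree of nodes using Foundation's event-driven `XMLParser` (which lives in `FoundationXML` on Linux); it is not the app's actual parser.

```swift
import Foundation
#if canImport(FoundationXML)
import FoundationXML // XMLParser is in FoundationXML on Linux
#endif

/// One element of a parsed template (hypothetical model).
final class TemplateNode {
    let name: String
    let attributes: [String: String]
    var children: [TemplateNode] = []
    private var rawText = ""

    init(name: String, attributes: [String: String]) {
        self.name = name
        self.attributes = attributes
    }

    /// Text content with surrounding whitespace and newlines removed.
    var text: String { rawText.trimmingCharacters(in: .whitespacesAndNewlines) }

    func appendText(_ string: String) { rawText += string }
}

/// Builds a TemplateNode tree from XML: a stack tracks the currently open elements.
final class TemplateParser: NSObject, XMLParserDelegate {
    private var stack: [TemplateNode] = []
    private var root: TemplateNode?

    /// Returns the root node, or nil if the XML is not well-formed.
    func parse(_ xml: String) -> TemplateNode? {
        stack = []
        root = nil
        let parser = XMLParser(data: Data(xml.utf8))
        parser.delegate = self
        return parser.parse() ? root : nil
    }

    func parser(_ parser: XMLParser, didStartElement elementName: String,
                namespaceURI: String?, qualifiedName qName: String?,
                attributes attributeDict: [String: String]) {
        let node = TemplateNode(name: elementName, attributes: attributeDict)
        if let parent = stack.last {
            parent.children.append(node)
        } else {
            root = node
        }
        stack.append(node)
    }

    func parser(_ parser: XMLParser, foundCharacters string: String) {
        stack.last?.appendText(string)
    }

    func parser(_ parser: XMLParser, didEndElement elementName: String,
                namespaceURI: String?, qualifiedName qName: String?) {
        _ = stack.popLast()
    }
}

// A hypothetical template; tag and attribute names are illustrative only.
let sample = """
<template id="headline" width="1080" height="1920">
  <stack align="center" padding="48">
    <text font="Helvetica-Bold" size="72" editable="true">Breaking News</text>
    <image src="logo.png"/>
  </stack>
</template>
"""

if let tree = TemplateParser().parse(sample) {
    print(tree.name, tree.children.first?.children.map(\.name) ?? [])
}
```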

Template Rendering

Once the user selects a template, its corresponding template data is parsed, and an in-memory representation is constructed as a tree of "nodes". Whenever a template needs to be rendered on screen, the app traverses the tree and constructs a hierarchy of CALayers.

CALayer is low-level enough that the same underlying CALayer structure can be used for every use case in the app. By contrast, if we had displayed templates with UIView, they could appear in the user interface, but the same structure could not be used to render them into the final video during export.

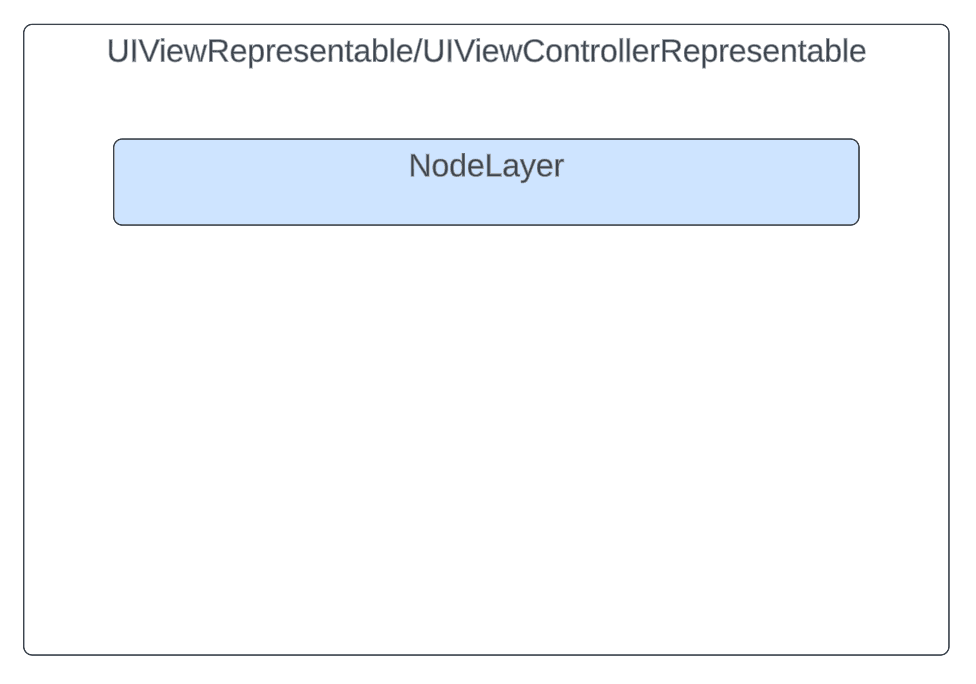
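The traversal can be sketched as a depth-first walk that creates one layer per node and attaches each child as a sublayer. To keep the sketch runnable without QuartzCore, it is generic over the layer type; in the app the type would presumably be `CALayer` (or per-element subclasses) and the attach step `parent.addSublayer(child)`. `Node`, `buildLayerTree`, and `StubLayer` are hypothetical names.

```swift
/// Hypothetical in-memory template node, as produced by the parser.
struct Node {
    let kind: String
    var children: [Node] = []
}

/// Depth-first traversal that mirrors the node tree as a layer tree.
/// `makeLayer` creates the layer for a single node; `addSublayer` attaches a child layer.
/// Children are attached in order, so later siblings sit on top (as with CALayer sublayers).
func buildLayerTree<Layer>(
    from node: Node,
    makeLayer: (Node) -> Layer,
    addSublayer: (Layer, Layer) -> Void
) -> Layer {
    let layer = makeLayer(node)
    for child in node.children {
        let childLayer = buildLayerTree(from: child, makeLayer: makeLayer, addSublayer: addSublayer)
        addSublayer(layer, childLayer)
    }
    return layer
}

/// Stand-in for CALayer so the sketch runs without QuartzCore.
final class StubLayer {
    let name: String
    private(set) var sublayers: [StubLayer] = []
    init(name: String) { self.name = name }
    func addSublayer(_ layer: StubLayer) { sublayers.append(layer) }
}

let template = Node(kind: "template", children: [
    Node(kind: "stack", children: [Node(kind: "text"), Node(kind: "image")])
])
let root = buildLayerTree(
    from: template,
    makeLayer: { StubLayer(name: $0.kind) },
    addSublayer: { parent, child in parent.addSublayer(child) }
)
print(root.sublayers[0].sublayers.map(\.name)) // ["text", "image"]
```

Because the same root layer comes out of one function, the view, the exporter, and the player can all consume it, which is the reuse the following sections describe.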

In the following examples, the blue NodeLayer object represents a template's entire CALayer structure; it appears in each one to highlight code reuse.

Displaying a template in a view

The root CALayer can simply be added as a sublayer of any UIView's layer. When the user taps the view, CALayer.hitTest(_:) is used to determine which layer was tapped. This matters because some template elements are meant to be interactive.

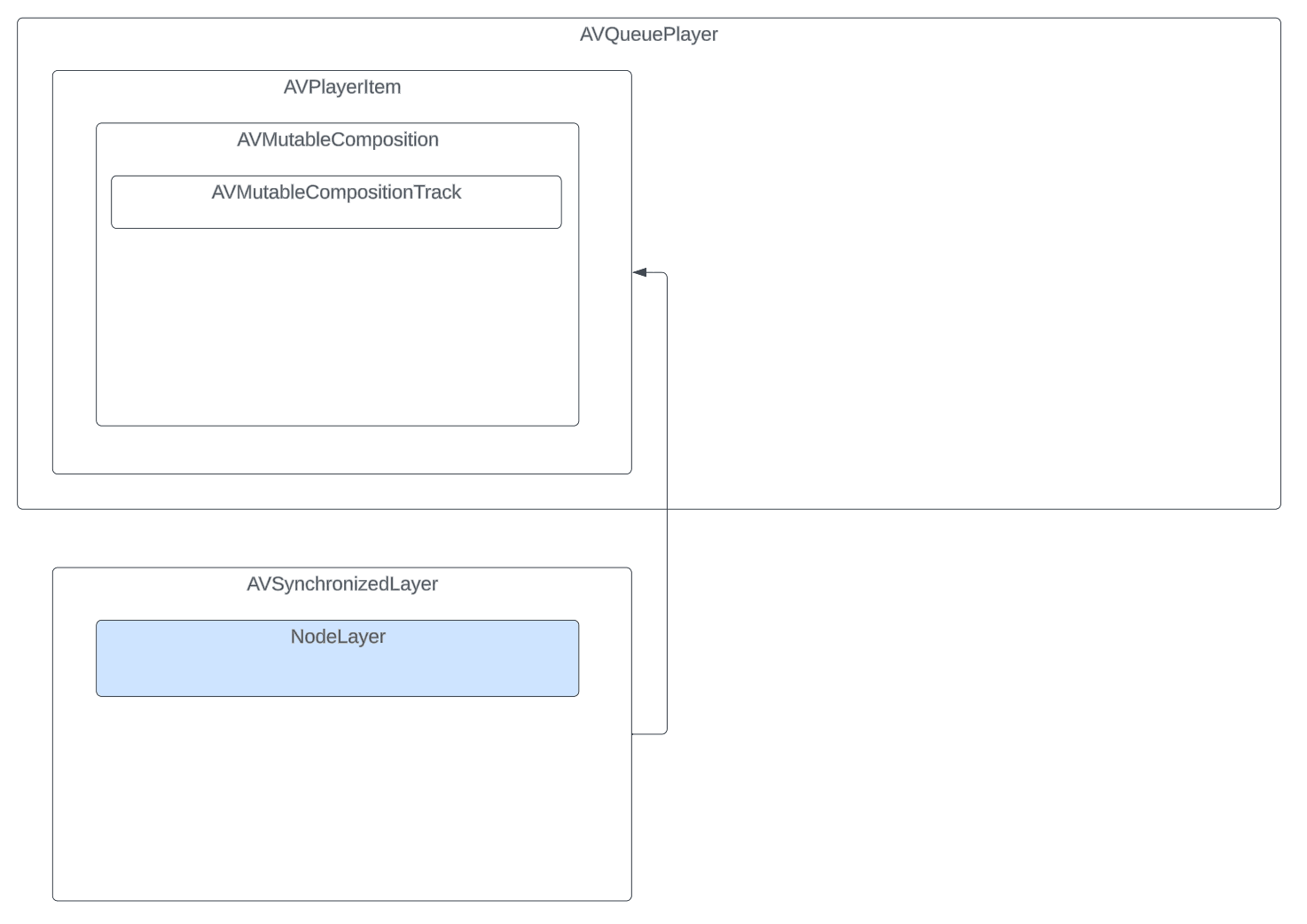
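On iOS, a tap handler would call `rootLayer.hitTest(point)` with the tap location in the view's coordinates and could then walk up `superlayer` until it reaches an element that accepts edits. The sketch below re-implements a simplified version of that lookup in plain Swift (frames only, no transforms or bounds offsets) so the logic can run anywhere; `Element`, `isEditable`, `hitPath`, and `editableElement(at:in:)` are hypothetical.

```swift
struct Point { var x, y: Double }

struct Rect {
    var x, y, width, height: Double
    /// Half-open containment, like CGRect.contains(_:).
    func contains(_ p: Point) -> Bool {
        p.x >= x && p.x < x + width && p.y >= y && p.y < y + height
    }
}

/// Hypothetical stand-in for a template element's layer.
final class Element {
    let id: String
    let frame: Rect          // in the parent's coordinate space
    let isEditable: Bool
    var sublayers: [Element]

    init(id: String, frame: Rect, isEditable: Bool = false, sublayers: [Element] = []) {
        self.id = id
        self.frame = frame
        self.isEditable = isEditable
        self.sublayers = sublayers
    }

    /// Simplified CALayer.hitTest(_:) semantics: `point` is in the parent's coordinates,
    /// sublayers are checked topmost (last) first, and the deepest hit wins.
    /// Returns the path from this element down to the deepest element hit.
    func hitPath(_ point: Point) -> [Element]? {
        guard frame.contains(point) else { return nil }
        let local = Point(x: point.x - frame.x, y: point.y - frame.y)
        for sublayer in sublayers.reversed() {
            if let path = sublayer.hitPath(local) { return [self] + path }
        }
        return [self]
    }
}

/// The deepest hit may be a decorative child, so pick the nearest editable ancestor
/// along the hit path (the equivalent of walking up `superlayer` on iOS).
func editableElement(at point: Point, in root: Element) -> Element? {
    root.hitPath(point)?.last(where: { $0.isEditable })
}

let headline = Element(id: "headline", frame: Rect(x: 20, y: 20, width: 200, height: 50),
                       isEditable: true,
                       sublayers: [Element(id: "glyphs", frame: Rect(x: 0, y: 0, width: 120, height: 50))])
let root = Element(id: "root", frame: Rect(x: 0, y: 0, width: 400, height: 400), sublayers: [
    Element(id: "background", frame: Rect(x: 0, y: 0, width: 400, height: 400)),
    headline,
])
print(editableElement(at: Point(x: 30, y: 30), in: root)?.id ?? "none") // headline
```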

Rendering a template during an export

When the user is finished editing, the app uses an AVAssetExportSession to export the final product.

Configuring the export session is fairly involved, but it ultimately lets you overlay any number of CALayers on your video using AVVideoCompositionCoreAnimationTool. It even keeps any attached CAAnimations in sync so that they play along with your composition. AVVideoCompositionCoreAnimationTool is not shown in the diagram below, but it is what ties the AVMutableVideoComposition to the NodeLayer.

Playing a template within a video player

Since AVPlayer plays AVPlayerItems, and an AVPlayerItem can be created from an AVComposition, the app can reuse most of the logic that builds the AVMutableComposition for the AVAssetExportSession described above.

However, AVVideoCompositionCoreAnimationTool doesn't work for playback (if I recall correctly, the app crashes), so the app needs another way to render the user's templates. This is where AVSynchronizedLayer comes in: it is a CALayer subclass that synchronizes the timing of its sublayers with an AVPlayerItem. In this case, the CALayers aren't configured in the AVMutableComposition at all. Instead, they are overlaid on the AVPlayer's (or AVQueuePlayer's) video and play in step with the AVPlayerItem, so the user can't tell the difference.

This is also how the app achieves its live preview with zero buffering.
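The shared composition-building step can be sketched as a pure function that lays trimmed clips end to end, which both the export path and the playback path could then consume. Everything here is a hypothetical model: times are plain seconds rather than `CMTime`, and inserting each placement into an `AVMutableCompositionTrack` with `insertTimeRange(_:of:at:)` is only described in comments.

```swift
/// Hypothetical timeline clip. Times are seconds; the app would use CMTime/CMTimeRange.
struct Clip: Equatable {
    let assetID: String
    var sourceStart: Double   // trim in-point within the source asset
    var duration: Double      // length after trimming
    var isMuted = false
}

/// Where a clip lands on the composition timeline.
struct Placement: Equatable {
    let clip: Clip
    let compositionStart: Double
}

/// Stitches clips end to end in the given order (re-ordering is just re-ordering the array).
/// For each placement, a composition builder would insert the clip's source range into the
/// video track at `compositionStart`, and skip the audio insert when `isMuted` is true.
func stitch(_ clips: [Clip]) -> (placements: [Placement], totalDuration: Double) {
    var cursor = 0.0
    var placements: [Placement] = []
    for clip in clips {
        placements.append(Placement(clip: clip, compositionStart: cursor))
        cursor += clip.duration
    }
    return (placements, cursor)
}

/// Splits a clip `offset` seconds after its trimmed start; nil if the offset is out of range.
func split(_ clip: Clip, at offset: Double) -> (Clip, Clip)? {
    guard offset > 0, offset < clip.duration else { return nil }
    var head = clip
    head.duration = offset
    var tail = clip
    tail.sourceStart += offset
    tail.duration -= offset
    return (head, tail)
}

let intro = Clip(assetID: "intro", sourceStart: 1, duration: 4)
let interview = Clip(assetID: "interview", sourceStart: 0, duration: 3, isMuted: true)
if let parts = split(intro, at: 1.5) {
    let timeline = stitch([interview, parts.1, parts.0])
    print(timeline.placements.map(\.compositionStart), timeline.totalDuration) // [0.0, 3.0, 5.5] 7.0
}
```

Keeping this step free of AVFoundation types makes it easy to test, and it is the part that export and preview would share.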

Custom Layout Manager

Because templates are editable, a user can change the size and position of the elements within them. For example, changes to the text, font family, or font size all affect the bounding box of the text's corresponding CALayer. Furthermore, this has a cascading effect on the expected positions of neighboring elements.

Since CALayer.layoutManager isn't available on iOS, we created LayoutLayer: a CALayer subclass that any template element can inherit from to automatically support alignment, padding, and auto-resizing.

As the user interacts with the app, Combine observes changes to the underlying template data and adjusts the properties of the corresponding LayoutLayers, providing real-time visual updates.
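A LayoutLayer-style pass can be reduced to a pure function that takes the children's measured sizes and returns their frames plus the container's new size. This sketch assumes a vertical stack with uniform spacing and horizontal alignment; those choices and all names are hypothetical. On iOS, the result would presumably be applied in an overridden `layoutSublayers()`, with a Combine subscriber calling `setNeedsLayout()` whenever the template data changes.

```swift
struct Size: Equatable { var width, height: Double }
struct Frame: Equatable { var x, y, width, height: Double }
struct Insets { var top, left, bottom, right: Double }
enum HorizontalAlignment { case leading, center, trailing }

/// Hypothetical layout pass for a vertical stack of template elements.
/// Returns each child's frame plus the container size after auto-resizing to fit.
func layoutVerticalStack(
    childSizes: [Size],
    containerWidth: Double,
    padding: Insets,
    spacing: Double,
    alignment: HorizontalAlignment
) -> (frames: [Frame], containerSize: Size) {
    let contentWidth = containerWidth - padding.left - padding.right
    var y = padding.top
    var frames: [Frame] = []
    for (index, size) in childSizes.enumerated() {
        if index > 0 { y += spacing }
        let x: Double
        switch alignment {
        case .leading:  x = padding.left
        case .center:   x = padding.left + (contentWidth - size.width) / 2
        case .trailing: x = padding.left + contentWidth - size.width
        }
        frames.append(Frame(x: x, y: y, width: size.width, height: size.height))
        y += size.height
    }
    // Auto-resizing: the container's height follows its content.
    return (frames, Size(width: containerWidth, height: y + padding.bottom))
}

let padding = Insets(top: 20, left: 20, bottom: 20, right: 20)
// A headline above a byline; the user then enlarges the headline font so it grows to 120pt tall.
let before = layoutVerticalStack(childSizes: [Size(width: 200, height: 80), Size(width: 100, height: 40)],
                                 containerWidth: 400, padding: padding, spacing: 10, alignment: .center)
let after = layoutVerticalStack(childSizes: [Size(width: 200, height: 120), Size(width: 100, height: 40)],
                                containerWidth: 400, padding: padding, spacing: 10, alignment: .center)
print(before.frames[1].y, after.frames[1].y) // 110.0 150.0 (the byline moves down)
```

The usage lines show the cascading effect described above: growing one element shifts its neighbor and resizes the container, with no per-element positioning code.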